Overview of DreamGrasp

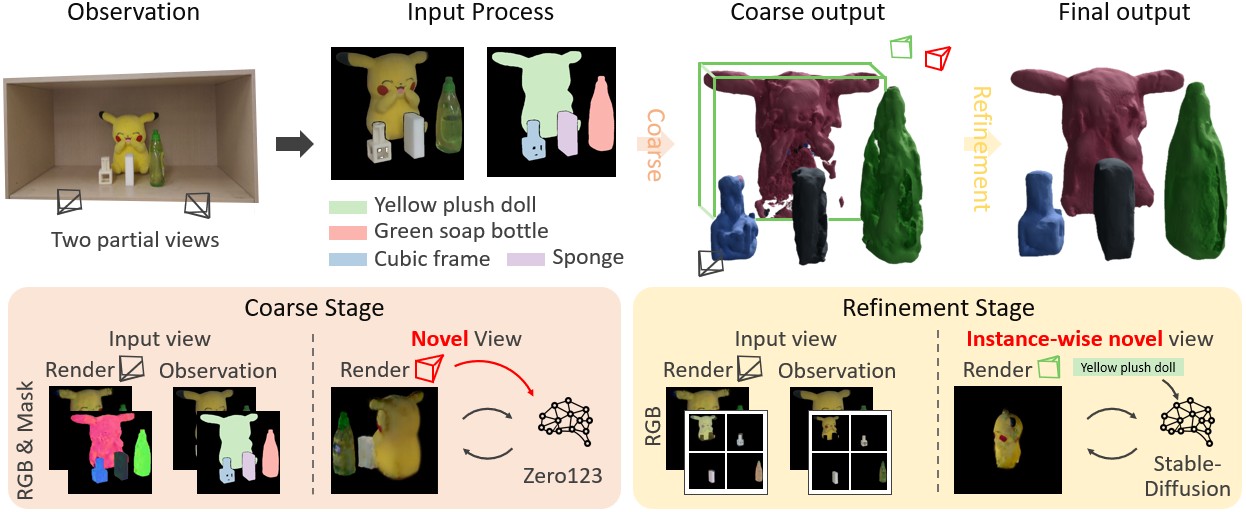

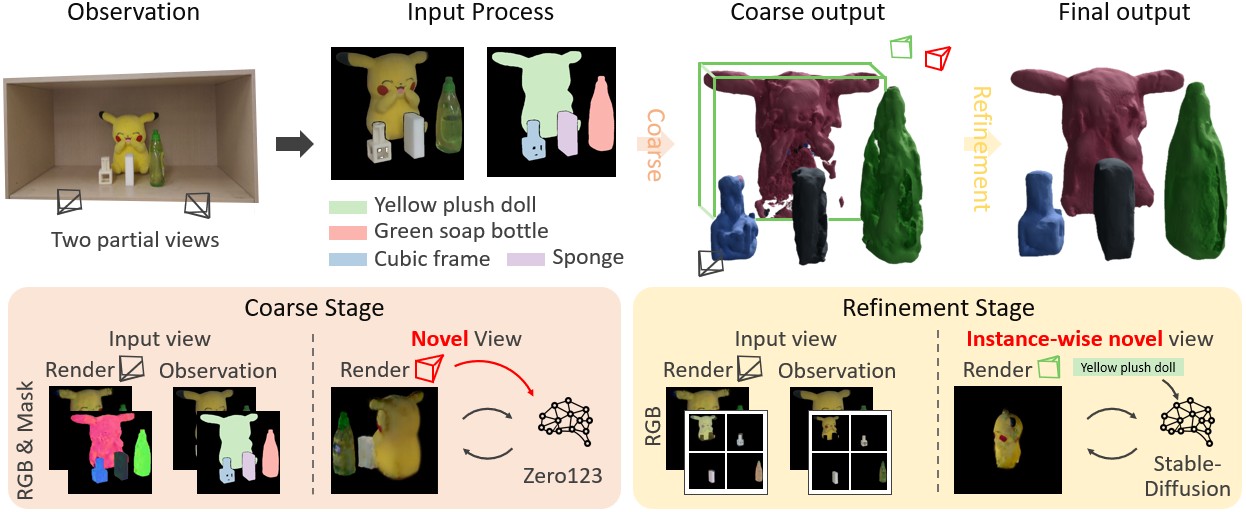

Overall pipeline of DreamGrasp

Recognition Results

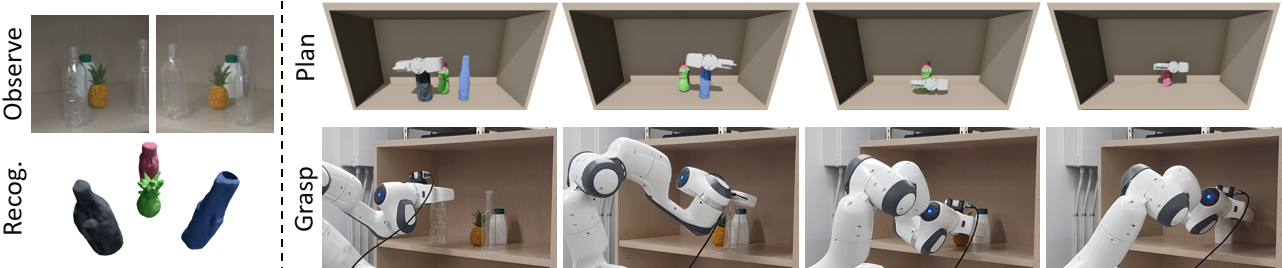

DreamGrasp grasps the objects one after another without re-recognizing them, while avoiding collisions with surrounding objects and the environment.

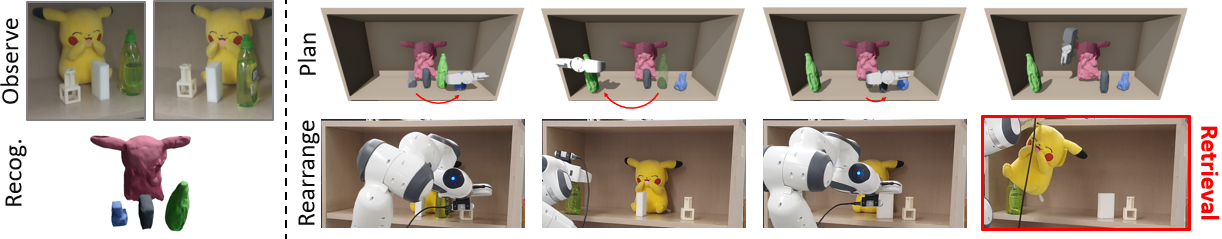

DreamGrasp can also rearrange nearby objects to retrieve a target object that was initially ungraspable (e.g., a Pikachu doll).

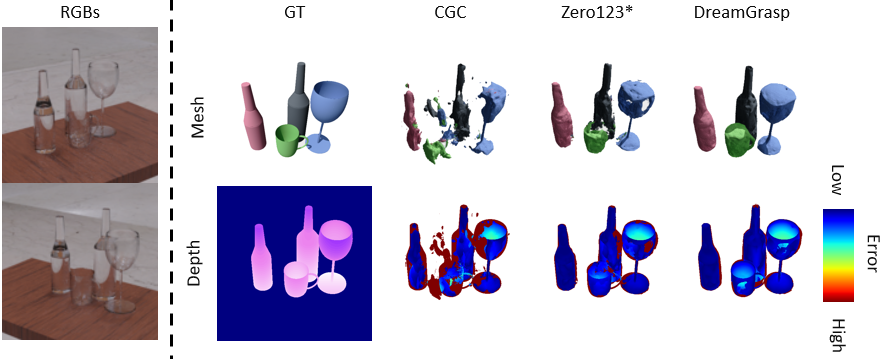

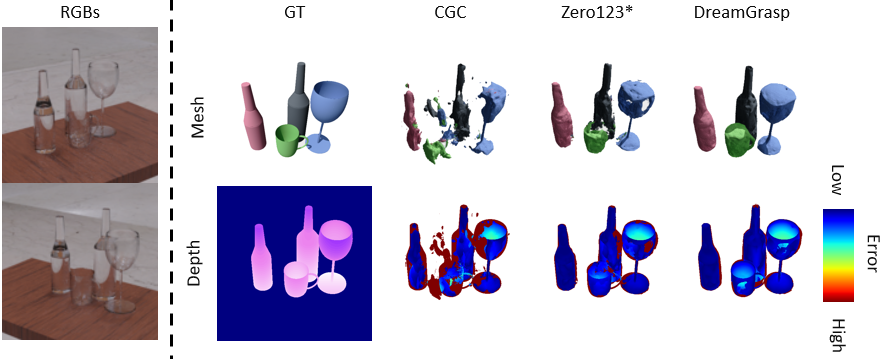

Partial-view 3D recognition -- reconstructing 3D geometry and identifying object instances from a few sparse RGB images -- is an exceptionally challenging yet practically essential task, particularly in cluttered, occluded real-world settings where full-view or reliable depth data are often unavailable. Existing methods, whether based on strong symmetry priors or supervised learning on curated datasets, fail to generalize to such scenarios. In this work, we introduce DreamGrasp, a framework that leverages the imagination capability of large-scale pre-trained image generative models to infer the unobserved parts of a scene. By combining coarse 3D reconstruction, instance segmentation via contrastive learning, and text-guided instance-wise refinement, DreamGrasp circumvents limitations of prior methods and enables robust 3D reconstruction in complex, multi-object environments. Our experiments show that DreamGrasp not only recovers accurate object geometry but also supports downstream tasks like sequential decluttering and target retrieval with high success rates.


Target Retrieval involves extracting a specific target object that is initially ungraspable because surrounding objects occlude it. Obstacles in the workspace must therefore be rearranged until the target becomes graspable. Prior works have proposed solving this task using instance-wise 3D recognition, an approach that requires three components: an instance-wise grasp pose sampler, a grasp pose collision detector, and a model of rearrangement dynamics (i.e., a prediction of how the scene changes after rearrangement). The first two components are provided by DreamGrasp. For rearrangement, we adopt a simple pick-and-place formulation, where dynamics are approximated by moving the selected object from its current pose to a predefined placement pose. Such rearrangement dynamics would not be accessible without instance-level identification of objects.
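The pick-and-place approximation of rearrangement dynamics can be illustrated with a minimal sketch. It assumes a scene stored as a dictionary that maps instance names to 4x4 homogeneous poses. The function names `pick_and_place` and `translation`, the instance names, and all pose values are hypothetical and chosen only for illustration; this is not the authors' implementation.

```python
import numpy as np


def translation(x, y, z):
    """Build a 4x4 homogeneous pose with identity rotation (illustrative helper)."""
    pose = np.eye(4)
    pose[:3, 3] = [x, y, z]
    return pose


def pick_and_place(scene, obj_id, placement_pose):
    """Predict the scene after moving one object to a predefined placement pose.

    Assumption: only the selected object changes pose; every other object
    keeps its current pose. The input scene is left unmodified.
    """
    if obj_id not in scene:
        raise KeyError(f"unknown object instance: {obj_id}")
    # Copy every pose so the predicted scene shares no arrays with the input.
    next_scene = {name: pose.copy() for name, pose in scene.items()}
    next_scene[obj_id] = np.array(placement_pose, dtype=float)
    return next_scene


# Hypothetical scene: a box stands in front of the target on the shelf.
scene = {
    "pikachu": translation(0.0, 0.5, 0.1),
    "box": translation(0.0, 0.3, 0.1),
}

# Move the obstacle to a predefined placement pose off to the side.
next_scene = pick_and_place(scene, "box", translation(0.4, 0.3, 0.1))
```

Because the update is keyed on an object instance, it makes concrete why this kind of dynamics model depends on instance-level identification: without per-object poses there is nothing to select and move.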

We demonstrate that DreamGrasp provides sufficiently accurate recognition results for downstream use by executing two manipulation tasks in a cluttered shelf environment. We place various real-world objects, including non-symmetric and transparent ones, on a shelf and perform object recognition.

@article{kim2025dreamgrasp,
  title={DreamGrasp: Zero-Shot 3D Multi-Object Reconstruction from Partial-View Images for Robotic Manipulation},
  author={Kim, Young Hun and Kim, Seungyeon and Lee, Yonghyeon and Park, Frank Chongwoo},
  journal={arXiv preprint arXiv:2507.05627},
  year={2025}
}